As a lecturer at the Hogeschool Utrecht, I teach Python to students who are mostly new to programming. Having recently restarted my attempts to learn Haskell, I can relate to students' feelings of being lost or overwhelmed. It takes me back to when I first started writing code. Not only does it give me the joy of learning something new, the process gives me insight in the things we take for granted when talking about code and learning a language: syntax, problem decomposition, patterns and approaches.

In this post, we explore the similarities and differences in imperative and functional approaches to accumulating values over a sequence using Python and Haskell.

For illustration purposes,

we are going to calculate the sum of a

sequence (list) of integers without using the

sum function in either language.

Accumulation through iteration

The accumulator pattern

is used to collect

information from a sequence and store the

result in a variable. Core to the idea is

that we initiate an accumulator variable

(in the following examples called acc)

and modify that variable when iterating over

the collection.

To calculate the sum of a list of numbers using the accumulator pattern, one would start counting at 0 and, for each number in the list, add said number to the accumulator value, updating the accumulator value in each iteration. This is an imperative way of thinking: instructions are written in a concrete, step-by-step fashion, modifying a program’s state during execution.

In Python, this sum implementation would look as follows:

def acc_sum(numbers):

acc = 0

for number in numbers:

acc = acc + number

return acc

result = acc_sum([1, 2, 3])

# Prints: 6

print(result)

Accumulation through recursion

Another way of accumulating values without modifying state can be found in recursion. Using recursion, a problem’s solution depends on solutions to smaller instances of the same problem.

There are three rules in order for a recursive function to be effective:

- The function must have a base case

- The function has a recursive case (also: inductive case) that moves towards the base case

- The function calls itself recursively

In imperative languages like Python, recursion can be accomplished by making a function call itself until some form of base case is encountered: a single value is returned instead of calling itself again.

def rec_sum(numbers):

# Base case

if numbers == []:

return 0

# Split head and tail

x, *xs = numbers

# Recursive case

return x + rec_sum(xs)

result = rec_sum([1, 2, 3])

# Prints: 6

print(result)

We check whether our base case is found

using an if-statement: if we encounter an empty list,

we return 0. If that is not the case, we continue to

the recursive case.

We then split up the input numbers into a head

(called x) and a tail (called xs).

Then, the result of adding x to the result of

(recursively) calling rec_sum on the tail, xs

is returned.

To be able to return a single value,

Python will perform the same operation on the

tail (adding the head to the application of the function

on that respective tail) until the base case is met.

The steps the interpreter will take

to resolve rec_sum([1, 2, 3]) recursively

looks somewhat like this:

rec_sum([1, 2, 3])

# Base condition not met: take head, call function on tail

1 + rec_sum([2, 3])

# Base condition not met: take head, call function on tail

2 + 1 + rec_sum([3])

# Base condition met: add 0 to all previous heads

3 + 2 + 1 + rec_sum([])

# Resulting expression

3 + 2 + 1 + 0

As you can see, the expression rec_sum([1, 2, 3]) is

resolved, step-by-step, into a resulting expression that

sums them all up: 3 + 2 + 1 + 0.

In Python, we need to use an if-statement to determine our base case. Haskell supports pattern matching, meaning we can define our base case in terms of a certain pattern to match against and our alternate case as another pattern. Pattern matching is a bit like function overloading in C-based languages like C, C++, C# and Java, but more powerful as it not only matches based on type but does some structural matching as well.

rec_sum :: [Int] -> Int

rec_sum [] = 0

rec_sum (x:xs) = x + rec_sum xs

main = do

-- Prints: 6

print $ rec_sum [1,2,3] 0

In Haskell, we start with a type definition to establish what our function should be doing: going from a sequence of integers to a single integer.

We define our rec_sum function twice. The

first definition is our base case: an empty list results

in 0. The second definition is our recursive case:

the input sequence is split up in

a single-value head (called x)

and a tail sequence (called xs).

We add our integer value from the head to

the result of (recursively) calling the same function

on the tail.

Haskell looks a lot cleaner when doing recursion than Python. This should be no surprise given Haskell’s functional nature.

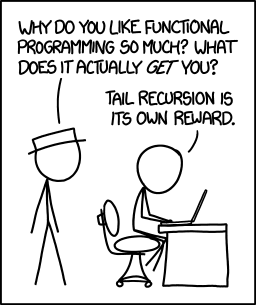

Tail recursion

One could imagine that recursion can be expensive with regards to memory, as the interpreter (or compiler) would need to perform a lot of consequent function calls that each operate on the call stack, building up a stack of calls to itself. This is why some programming languages have techniques in place that optimize some forms of recursion by making it look more like its imperative accumulator counterpart.

This can usually only be done when doing tail recursion,

meaning the last call (the tail call)

is the recursion.

In our case, the last operation is not our call to rec_sum,

but the + operation.

To make the recursion behave in a tail-recursive manner,

we can pass the accumulator into the function

on each call. This way, the accumulation happens

before each recursive function call.

Runtime or compiler optimizers can then

do tail-call optimization.

In Python, it would look like this:

def trec_sum(numbers, acc = 0):

if len(numbers) == 0:

return acc

return trec_sum(numbers[1:], acc + numbers[0])

result = trec_sum([1, 2, 3])

# Prints: 6

print(result)

However, be advised that Python does not optimize tail calls. This has been a deliberate choice by Guido van Rossum, primary author of Python, as he believes recursion to be unpythonic and tail recursion elimination (TRE) would come at the cost of debuggability. From a language design standpoint, this is understandable: although Python has some functional and declarative constructs, its main philosophy is imperative in nature.

Is it really such a big deal to rewrite your function to use a loop? (After all TRE only addresses recursion that can easily be replaced by a loop. :-)

Although Haskell optimizes in different ways because of the referential transparent properties that come with the purity of the language, we can define an explicit tail-recursive function following the same principle: make sure the function call is the last call that occurs within the function. It looks like this:

trec_sum :: [Int] -> Int -> Int

trec_sum [] acc = acc

trec_sum (x:xs) acc = trec_sum xs (x + acc)

main = do

-- Prints: 6

print $ trec_sum [1,2,3] 0

You can notice two things. First of all, we explicitly pass in the accumulator between calls. Secondly, the function’s type signature has changed. This is because we have to pass in the accumulator’s initial value every time. This can be mitigated by introducing another function that refers to the function with explicit tail-call-recursion:

rec_sum :: [Int] -> Int

rec_sum xs = trec_sum xs 0

trec_sum :: [Int] -> Int -> Int

trec_sum [] acc = acc

trec_sum (x:xs) acc = trec_sum xs (x + acc)

main = do

-- Prints: 6

print $ rec_sum [1,2,3]

As we have seen, recursion is a general pattern for breaking up a problem in pieces and introducing a function that can solve each piece by calling itself.

Accumulation through reduction

There is also a functional pattern that specifically tends towards cases of reducing a sequence of values to a single value. In some languages, this is called a reduction or reduce. In other languages this is known as a fold.

The reduce function is a higher-order function, meaning that it takes another function. It applies the given function on each value in order to accumulate a final resulting value.

Because Python is mostly imperative,

the reduce function has been hidden away in the

functools module.

As you can see in the example below,

the reduce function takes a combining function

and an iterable to apply the function on.

Optionally, it takes an

initializer value as a third argument.

from functools import reduce

def plus(num, acc):

return acc + num

def fold_sum(numbers):

return reduce(plus, numbers)

result = fold_sum([1, 2, 3])

# Prints: 6

print(result)

Alternatively, a nameless function can be used. This is called a lambda function, after the branch of mathematics on which functional programming is based. This looks as follows in Python:

from functools import reduce

def fold_sum(numbers):

return reduce(lambda num, acc: num + acc, numbers)

result = fold_sum([1, 2, 3])

# Prints: 6

print(result)

Finally, you can refer to the plus operator in Python instead of defining the operation manually. Using the operator module, one can refer to an operator as if it were a function. This reads quite clearly: reduce all numbers by adding them together.

from functools import reduce

from operator import add

def fold_sum(numbers):

return reduce(add, numbers)

result = fold_sum([1, 2, 3])

# Prints: 6

print(result)

Wrapping the reduce in a function like fold_sum has

no real value in this case if one can assume

knowledge about what reduce means.

In Haskell, a fold is used to “process a data structure in some order and build a return value.” It takes a combining function, an initial value and a data structure and combines the values in that data structure when evaluated.

fold_sum :: [Int] -> Int

fold_sum = foldl (+) 0

main = do

-- Prints: 6

print $ fold_sum [1,2,3]

Types of folds

Fundamentally, there are two types of folds: left folds and right folds.

A left fold combines all elements until the last with the last (left associativity), while a right fold combines the head with the results of combining the tail (right associativity):

-- Left fold: (((1 + 2) + 3) + 4) + 5

foldl (+) 0 [1,2,3,4,5]

-- Right fold: 1 + (2 + (3 + (4 + 5)))

foldr (+) 0 [1,2,3,4,5]

For commutative operations, operations for which the order does not matter, it does not matter whether you use a left or right fold. For non-commutative operations, like subtraction or division, it does:

-- Left fold: (((1 - 2) - 3) - 4) - 5

-- Results in: -13 (-8 - 5)

foldl (-) 0 [1,2,3,4,5]

-- Right fold: 1 - (2 - (3 - (4 - 5)))

-- Results in: 3 (1 - -2)

foldr (-) 0 [1,2,3,4,5]

Just so you know, Python’s reduce is a left fold.

from operator import sub

# (((1 - 2) - 3) -4) - 5

result = reduce(sub, [1, 2, 3, 4, 5])

# Prints: -13

print(result)

We have seen that Haskell has a right fold (foldr) and a left fold (foldl).

There is also foldl' (left fold prime).

The use of foldl' has

to do with strict operator application

and efficiency in relation to Haskell’s

lazy evaluation.

Basically, foldl' is a left fold that prevents stack

overflows because it reduces the chain of calls

early instead of keeping a large call stack

and resolving it in the end.

More information on folds can be found on the

Haskell wiki.

Declarative programming

Notice that functional constructs like reduce are more declarative than their imperative counterparts. Instead of stating each individual instruction, you express your intention and the compiler or runtime will figure it out: reduce the list numbers to a single number by adding them together. By using a common language to solve problems that are similar in shape, you can use solutions that are similar in abstraction – like design patterns. These abstractions are composable in nature and ideal for reuse.

This results in less code, but requires familiarity with these higher-level concepts in order to understand it. The cognitive load of stepping through detailed steps in imperative solutions is traded against prior knowledge of common abstractions in more functional approaches. In other words: the “complexity” shifts from what is on the screen to what concepts you know.

I think it is too bad that Python hides away the reduce function in favor of a more imperative approach to accumulation. That being said, Python does have list, set and generator comprehensions. Comprehensions are a declarative way of programming also found in functional languages like Haskell. They are useful to create a new sequence based on an existing sequence and applying a transformation and/or a filter or selection to it. However, comprehensions cannot easily be used to accumulate values, so one of the above patterns would still be necessary. Perhaps we will check out comprehensions in a later post.

In conclusion

Accumulating a single value based on a sequence of values can be done via the imperative accumulator pattern or through functional patterns like recursion or folds.

Functional constructs can be applied to achieve a more declarative style: state your intent and the compiler or runtime will follow. However, this requires a familiarity with these higher-level concepts on the side of the developer.

As we have seen, although functional programming is doable, Python is not really a functional language. It is imperative in nature and avoids typical functional concepts like recursion and folds because of it being “unpythonic” and because of the lack of tail-call optimisation.

Thoughts?

Leave a comment below!